Overview

When I started doing research this summer with the Skidmore-APPSS collaboration, my greatest roadblock was handling data. There were dozens of spreadsheets containing extragalactic data, with cryptic column headers and millions of data entries with no inherent meaning to me.

Additionally, trying to access the full datasheets was a nightmare. Scattered across numerous websites and online databases, I had to parse through research papers to find the location of the publically available data.

Furthermore, most websites only hosted the files themselves, forcing you to download the entire spreadsheet instead of querying only the data needed.

Also, when working in collaboration with other researchers, I noticed that any time I tried to run their code, I would first have to change all references to file locations on local computers within the document, since everyone was downloading the entire spreadsheet onto their computer and reading it from there.

Finally, I realized that once my research was completed, I wanted it to be publicly available, and there was no way for me to do that with our current infastructure.

Starting in August, I took it upon myself to build an online hub for the Skidmore research group to share and manage spreadsheets for our data analysis projects.

Since all of our research is data driven, I wanted to create an API where my colleagues could access the same data, forgoing the need to store spreadsheets on their own computers (increasing code sharability), along with the ability to query exact data needed, without being proficient in SQL.

I also wanted to put what I learned in software engineering academic courses to the test, by building my own publicly consumed service that would make my research process easier, along with students for years to come.

As the project evolved, I also realized I could eventually use the website as a way to publicly host the Tendril Finder, my capstone astrophysics data science research project, currently in progress.

Authorization

Due to the API being publically available, not all endpoints are open for public consumption. Such routes are locked behind JWT authorization tokens, which require an account to obtain. Contact Us here to send us a message about making an account.

Once you register an account and password, go to the TODO: AUTHORIZE tab on the home page and sign in. You will be presented with a JWT access token, which you can copy and paste and use to unlock the protected routes in the API.

If you are consuming the API through a script, the general process is as follows:

# language: python

import requests

import pandas as pd

import io

url_signin = "https://68.9.221.13:3000/signin"

# compose your URL using the API and paste it here

url_fetch = "https://68.9.221.13:3000/tully-group"

# Create the payload using your email and password

r = requests.post(url_signin, data={'email': '', 'password': ''})

# Extract the JWT token from the response

token = r.json()['accessToken']

# GET request, all subsequent requests must include the Authorization: Bearer header

s = requests.get(url_fetch, headers={'Authorization': "Bearer {}".format(token)}).content

# If pandas has trouble reading the file, use this workaround

df = pd.read_csv(io.StringIO(s.decode('utf-8')))

Additionally, note that all users, authorized or not, are subject to a request rate limit, currently set to 10 requests per minute ( only applied to API routes)

Some Background

If you are comfortable with SQL, skip to here

If you are comfortable with internet protocols and the basics of APIs, skip to here.

For people new to consuming APIs, it is helpful to understand how the REST architectural style works, along with HTTP protocol in general.

REST APIs

REpresentational State Transfer is an architecture for building and consuming APIs, and is currently one of the most wide spread design frameworks for APIs

The core structure of a REST API lies in its resources. Accessing a resource is done through modifying a Universal Resource Identifiers (similar to a URL).

The URI contains the network location of the resource, along with necessary identifiers for accessing that resource.

Requests are made (usually over HTTP, but not necessarily) using a resource method. In the case of the Skidmore Catalog, these are the resource methods you will be working with:

- GET: This is the most common request header. The sole purpose of a GET request is to retrieve data.

- POST: Any time you are supplying information to a website, you are likely making a POST request. A POST request includes a response body, which consists of a map between fields and a response. For example, when you sign in to the Skidmore Catalog, you are sending a request with a body that looks like {email: "your email", password: "your password"}. Obviously, this allows the server to process that data you send, and send back a response accordingly.

Requests also contain headers, which include information about the request, such as authorization tokens

Responses also have headers, containing information about the server, along with a status code:

- 2**: Request processed successfully, response should be fine

- 4**: Request was not able to be processed due to an issue on the client's end (404: couldn't find resource you asked for, 403: forbidden due to authorization failure

- 5**: Refers to internal server errors

Finally, there is the response body, which is either HTML to be rendered by your browser, or in the case of the catalog API, raw csv data to work with.

Using the query builder

For relatively simple queries, you can select from a selection of drop-down fields to extract exactly the data you want from a resource.

For every resource, there is an endpoint that allows for a custom query. When you click "Try it out", there are 4 parameters to fill out:

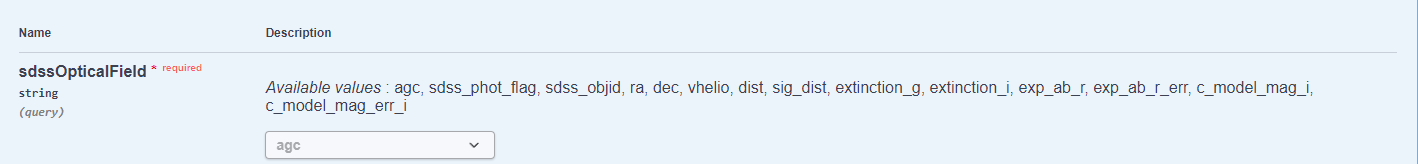

Field

The field parameter refers to the column that you want to apply a condition and a threshold to. For example, if we wanted to return the optical data from the SDSS catalog, for all galaxies farther away than some threshold, we would select the DIST field

You can also use the query builder to search by ID, by selecting the appropriate ID column from the field select drop down. In this case, we would select AGC

Consult the data sheets for more information about the meaning of the columns (along with their units, useful for the threshold parameter)

Condition

The condition parameter refers to the conditional you want to apply to your search

- gt: greater than

- gte: greater than or equal to

- lt: less than

- lte: less than or equal to

- equals: equals exactly

- not: not equal to

For example, when searching by ID, you would almost always use equals

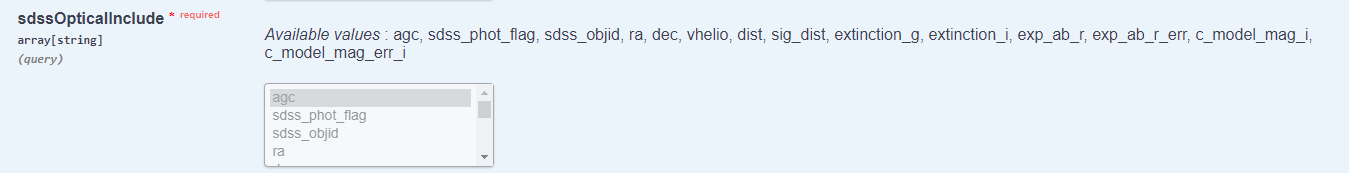

Include

The include parameter allows an array of columns to be selected. These are the columns that will be returned in the response payload

For example, if you wanted the entire data set, you would select all the fields (by dragging your mouse)

You can also use CTRL+CLICK to select single fields at a time

For example, if you only wanted to know the location of galaxies, you would use CTRL+CLICK to select agc, ra, dec (ID, celestial coordiantes)

Note, you don't necessarily have to return data that you are operating on. For example, if you wanted to search for a galaxy's information by ID, you don't have to return the ID in the payload.

Threshold

The threshold allows you to type a number that acts as the threshold that is compared against the conditional. For example, when searching by ID, this is where you would put the ID.

For thresholds pertaining to cosmological properties, make sure to consult the data sheets first so that your threshold makes sense (units)

SQL Queries

Sometimes, your query may be too complicated for the query builder. If we wanted to search using multiple columns and conditionals, you would need more precise control over your query.

Enter SQL, Structured Query Language

If you are proficient in SQL, there is an endpoint for every resource allowing for a custom SQL query

For this API, the only SQL allowed are WHERE statements. The SELECT (fields) statement is handled by the drop-down on the endpoint (check out the previous section for how to use that)

As an example, if we wanted to only return galaxies in a certain portion of the sky, we could use

-- language: SQL

WHERE

ra BETWEEN 0 AND 1

AND

dec BETWEEN 15 AND 20

Which can obviously be modified for any celestial coordinate and threshold

Note: SQL inputs are sanitized by prisma on the backend, but regardless, all SQL endpoints require authentication, due to possibilities of SQL injection

Retrieving Data

Once you have your query planned out (or if you just want to return all the data), how do you actually process that data?

One approach is to simply copy the response body from the swagger docs, and use that raw csv data wherever you need it

However, a faster and more appropriate method is to directly read from the URI into an object you can actually use

For this example, I'll be using Pandas, a Python data science library.

Step 1: Execute your query on the docs, and look through the response body to make sure it's what you wanted

Step 2: Copy the 'REQUEST URL' field from swagger responses block

Step 3: Read the data straight into a pandas dataframe:

# language: python

df = pd.read_csv("copied url")

With one line of code, you have a mutable form of the data to start analyzing.

Note: For endpoints that require authorization, there are a few more steps required to retrieve the data. See the example here

You can also use the auto-generated "Curl" to access the data remotely.

Tech Stack

For those that are interested, here are the technologies/frameworks I used for this project:

Web Development:

- TypeScript: An extension to JavaScript that is strongly typed, allowing me to use concepts from my Object Oriented Programming classes for web development

- NestJS: By far my favorite JavaScript framework, used to create a scalable, enterprise grade backend

- Docker: To containerize the entire application (and the database) so that it can be hosted anywhere, with ease

- PostgreSQL: A database to store necessary datasets, along with execute SQL searches

- Prisma: An ORM (object relational mapper) to handle database management and querying

- SwaggerUI: To build the API to OpenAPI specifications, along with providing an interactive documentation page to consume the API

- Bootstrap: To quickly create a simple UI

- PassportJS: To implement JWT authentication

- Webpack: To consolidate dozens of TypeScript files into a single JS file suitable for the web

- GitHub: To host the source code of the project, allowing it to be moved to new server environments

Tendril Finder:

- Python: The primary language for data science

- Pandas/Numpy/NetworkX/Scikit-Learn/Astropy/and more: Python packages commonly used for data science

- Jupyter Notebooks: For providing cohesive documentation to the various Python scripts used for the project

- Asana: For managing my research team